AMD's powerful AI chips can finally be unleashed on Windows PCs

AMD's hardware teams have tried to redefine AI inferencing with powerful chips like the Ryzen AI Max and Threadripper. But in software, the company has been largely absent where PCs are concerned. That's changing, AMD executives say.

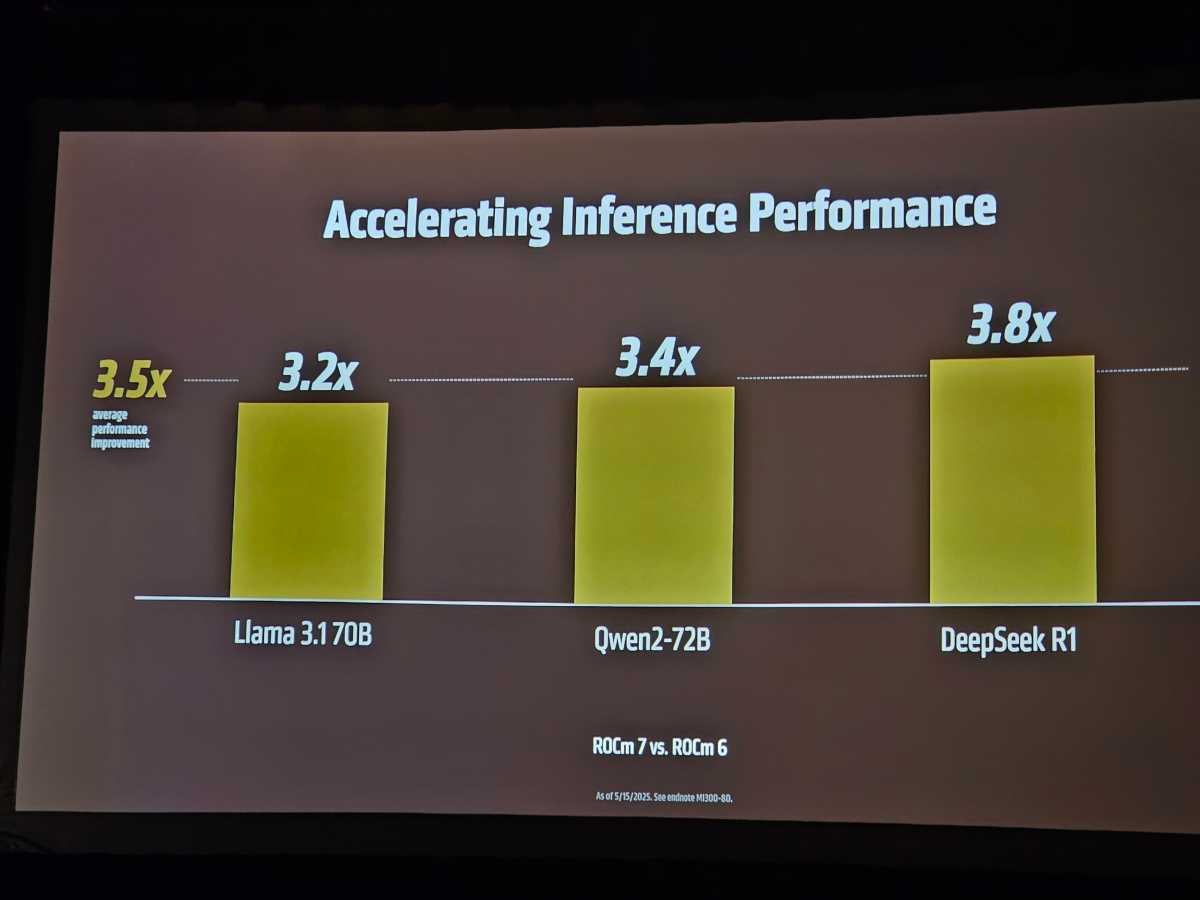

AMD's Advancing AI event on Thursday focused on enterprise-class GPUs like its Instinct lineup. But it's a software platform you may not have heard of, called ROCm, that AMD depends on just as much. AMD is releasing ROCm 7 today, which the company says can boost AI inferencing by three times through the software alone. And it's finally coming to Windows to battle Nvidia's CUDA supremacy.

Radeon Open Compute (ROCm) is AMD's open software stack for AI computing, with drivers and tools to run AI workloads. Remember the Nvidia GeForce RTX 5060 debacle of a few weeks ago? Without a software driver, Nvidia's latest GPU was a lifeless chunk of silicon.

Early on, AMD was in the same pickle. Without the limitless resources of companies like Nvidia, AMD made a choice: it would prioritize big businesses with ROCm and its enterprise GPUs instead of client PCs. Ramine Roane, corporate vice president of the AI solutions group, called that a "sore point:" "We focused ROCm on the cloud GPUs, but it wasn't always working on the endpoint, so we're fixing that."

Mark Hachman / Foundry

In today's world, simply shipping the best product isn't always enough. Capturing customers and partners willing to commit to the product is a necessity. It's why former Microsoft CEO Steve Ballmer famously chanted "developers, developers, developers" on stage; when Sony built a Blu-ray player into the PlayStation, movie studios gave the new video format a critical mass that the rival HD-DVD format didn't have.

Now, AMD's Roane said that the company has realized that AI developers like Windows, too. "It was a decision not to use resources to bring the software to Windows, but now we realize that, hey, developers really care about this," he said.

ROCm will be supported by PyTorch in preview in the third quarter of 2025, and by ONNX-EP in July, Roane said.

Presence is more important than performance

All this means that AMD processors will finally gain a much larger presence in AI applications. If you own a laptop with a Ryzen AI processor, a desktop with a Ryzen AI Max chip, or a desktop with a Radeon GPU inside, it will have more opportunities to tap into AI applications. PyTorch, for example, is a machine-learning library that popular AI models like Hugging Face's "Transformers" run on top of. That should make it much easier for AI models to take advantage of Ryzen hardware.

ROCm will also be added to "in box" Linux distributions: Red Hat (in the second half of 2025), Ubuntu (the same) and SUSE.

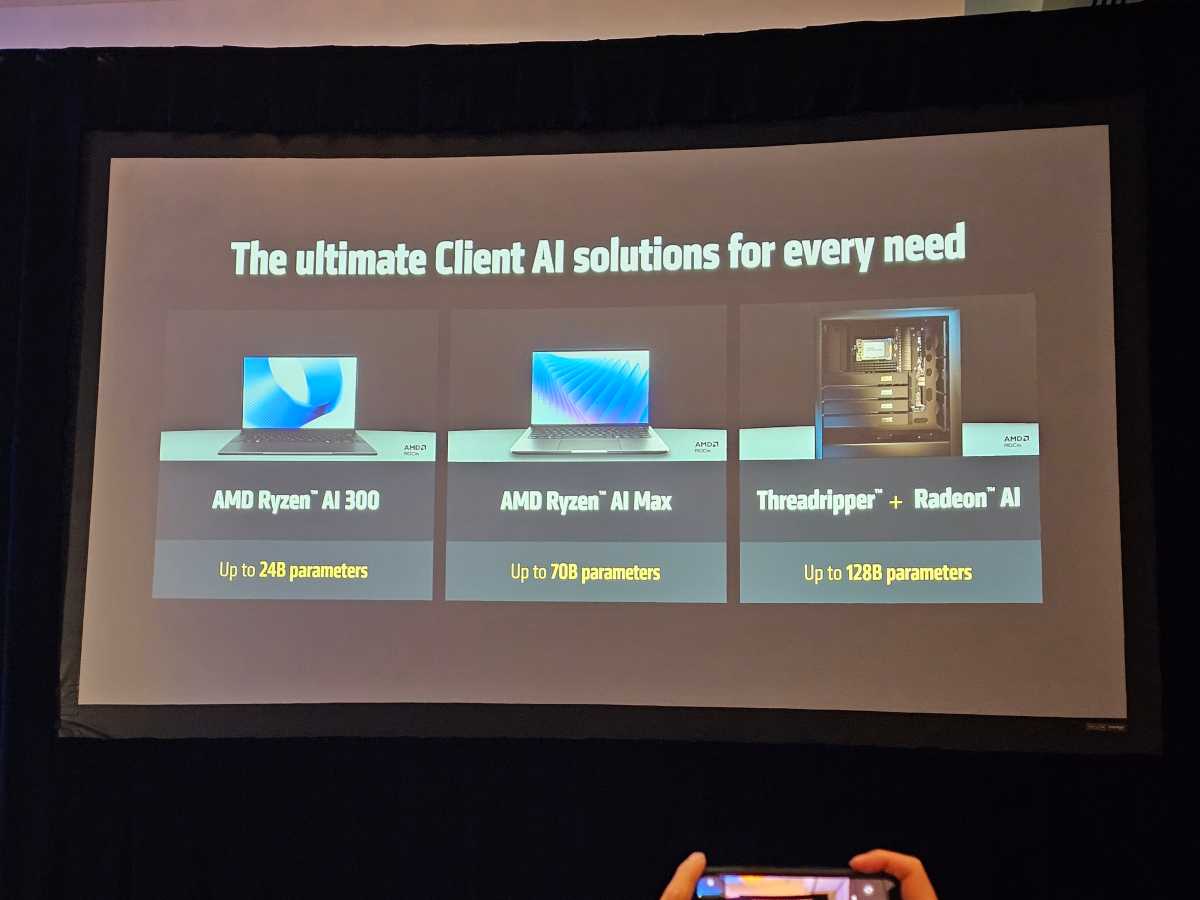

Roane also provided some context on the model size that each AMD platform should be able to run, from a Ryzen AI 300 notebook on up to a Threadripper platform.

…but performance substantially improves, too

The AI performance improvements that ROCm 7 adds are substantial: a 3.2X performance improvement in Llama 3.1 70B, 3.4X in Qwen2-72B, and 3.8X in DeepSeek R1. (The "B" stands for the number of parameters, in billions; the more parameters, the higher the quality of the outputs, generally.) Today, those numbers matter more than they have in the past, as Roane said that inferencing chips are showing steeper growth than processors used for training.

("Training" generates the AI models used in products like ChatGPT or Copilot. "Inferencing" refers to the actual process of using AI. In other words, you might train an AI to know everything about baseball; when you ask it if Babe Ruth was better than Willie Mays, you're using inferencing.)
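
To give a rough sense of why parameter counts decide which hardware can run a model, here is a minimal back-of-the-envelope sketch in Python. It assumes the memory needed for a model's weights is simply the parameter count times the bytes stored per parameter, and it ignores everything else a real runtime needs (activations, caches, overhead). The function name `weight_memory_gb` is made up for illustration, and the bit widths are assumptions, not figures from AMD.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Assumption: every parameter is stored at the same precision.
    """
    bytes_per_param = bits_per_param / 8
    # Billions of parameters times bytes per parameter gives gigabytes.
    return params_billions * bytes_per_param


if __name__ == "__main__":
    # Model sizes come from the names quoted above; precisions are illustrative.
    for name, size_b in [("Llama 3.1 70B", 70), ("Qwen2-72B", 72)]:
        for bits in (16, 8, 4):
            gb = weight_memory_gb(size_b, bits)
            print(f"{name} at {bits}-bit: ~{gb:.0f} GB for weights")
```

By this estimate, a 70-billion-parameter model needs on the order of 70GB for its weights at 8 bits per parameter, and about half that at 4 bits, which is why smaller or more heavily compressed models are the ones that fit on notebooks.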

AMD said that the improved ROCm stack also offered a similar uplift in training performance, about three times the previous generation. Finally, AMD said that its own MI355X running the new ROCm software would outperform an Nvidia B200 by 1.3X on the DeepSeek R1 model, with 8-bit floating-point precision.

Again, performance matters. In AI, the goal is to push out as many AI tokens as quickly as possible; in games, it's polygons or pixels instead. Simply offering developers a chance to take advantage of the AMD hardware you already own is a win-win, for you and AMD alike.

The one thing that AMD doesn't have is a consumer-focused application to encourage users to use AI, whether it be LLMs, AI art, or something else. Intel publishes AI Playground, and Nvidia (though it doesn't own the technology) worked with a third-party developer for its own application, LM Studio. One of the convenient features of AI Playground is that every available model has been quantized, or tuned, for Intel's hardware.

Roane said that similar models exist for AMD hardware like the Ryzen AI Max. However, consumers have to go to repositories like Hugging Face and download them themselves.

Roane called AI Playground a "good idea." "No specific plans right now, but it's definitely a direction we would like to move," he said, in response to a question from PCWorld.com.